Large Language Models: The Future of AI Text Generation

- July 8, 2023

- Posted by: Kulbir Singh

- Category: Artificial Intelligence, Machine Learning


Artificial Intelligence (AI) has advanced significantly in recent years, with machine learning and deep learning leading the charge. One of the most exciting developments in this field is the large language model. You have probably heard of ChatGPT and Google Bard, which are both examples of large language models. Trained on large amounts of text data, these models can generate human-like text, opening up a world of possibilities in applications such as chatbots, content generation, and more.

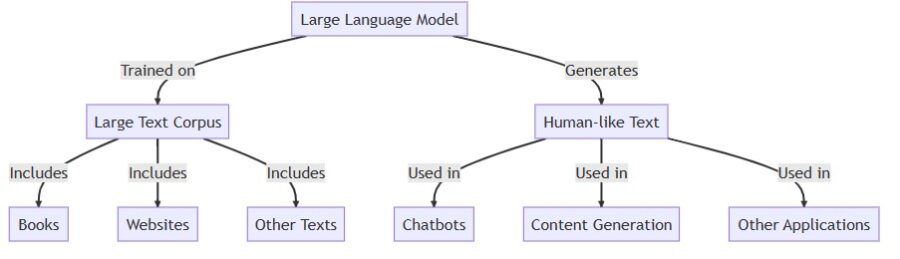

What is a Large Language Model?

A large language model is, as the name suggests, a type of AI model that has been trained on a large amount of text data. This text data, also known as a corpus, can include a wide variety of sources such as books, websites, and other texts. The model learns from this data, picking up on patterns, structures, and nuances in the language. It then uses this knowledge to generate human-like text that is contextually relevant and grammatically accurate. GPT-3, the model family from which the model behind ChatGPT was developed, was trained on hundreds of gigabytes of text data.

Large language models belong to a broader class of machine learning models known as sequence models. These models are designed to handle sequential data, where the order of the data points matters. Recurrent neural networks (RNNs) are an earlier example of this class, while today's large language models are typically built on the transformer architecture. In the case of language, the sequence of words in a sentence is crucial for understanding its meaning.

Applications of Large Language Models

The value of large language models lies in their ability to understand and generate human-like text. They have many applications:

- Chatbots: Chatbots can be powered by large language models, which allow them to interpret user inquiries and answer in a natural, human-like manner. This can dramatically improve the user experience by making interactions with the chatbot feel more like a conversation with a person. Many chat systems today work this way: no human is typing on the other end, and a virtual assistant replies using a large language model.

- Content Generation: These models can be used to create content as well. Whether the task is writing articles, creating social media posts, or generating product descriptions, large language models can produce fluent, human-like content that is often hard to distinguish from material written by a person.

- Other Applications: Large language models have many other applications. They can be used in areas such as translation, summarization, sentiment analysis, and more. The ability to understand and generate human-like text makes these models incredibly versatile and valuable.

How Do Large Language Models Work?

Large language models are trained using a machine learning technique called deep learning. Deep learning models learn to make predictions by being exposed to large amounts of data and adjusting their internal parameters to minimize the difference between their predictions and the actual outcomes.
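The idea of adjusting internal parameters to shrink the gap between predictions and actual outcomes can be shown on a much smaller problem than language. The sketch below is only an illustration, not how any particular language model is trained: it fits a straight line to five made-up data points using plain gradient descent. The data, the function name `train`, the learning rate, and the step count are all assumptions chosen for the example.

```python
# Fit y = w * x + b to toy data by gradient descent on mean squared error.
# The data below is made up for illustration and follows y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]


def train(xs, ys, learning_rate=0.05, steps=2000):
    w, b = 0.0, 0.0  # internal parameters, starting from an arbitrary guess
    n = len(xs)
    for _ in range(steps):
        # Error = prediction minus actual outcome, for each example.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Gradients of the mean squared error with respect to w and b.
        grad_w = 2.0 * sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = 2.0 * sum(errors) / n
        # Move each parameter a small step against its gradient.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


w, b = train(xs, ys)
print(round(w, 3), round(b, 3))
```

After enough steps the two parameters should land very close to 2 and 1, the values used to generate the data. A large language model follows the same principle, but with billions of parameters instead of two.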

In the case of large language models, the data they are trained on is text. The model learns to predict the next word in a sentence based on the words it has seen so far. It does this by learning the statistical relationships between words in the training data.

For example, if the model often sees the word “blue” following the words “The sky is”, it will learn that “blue” is a likely prediction after this sequence of words. Over time, and with enough data, the model can learn to make highly accurate predictions that take into account the context of the sentence, the grammar of the language, and even some of the subtleties and nuances of the language.
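To make the idea of learning statistical relationships between words concrete, here is a minimal sketch that simply counts which word follows each short context in a tiny hand-made corpus. This is a simple counting model, not a neural network, and it is far cruder than what a real large language model does. The corpus and the helper names `build_next_word_counts` and `predict_next` are assumptions made up for this example.

```python
from collections import Counter, defaultdict

# A tiny hand-made corpus, invented purely for illustration.
corpus = [
    "the sky is blue",
    "the sky is blue today",
    "the sky is clear",
    "the grass is green",
]


def build_next_word_counts(sentences, context_size=3):
    """Count how often each word follows each context of up to context_size words."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for i in range(len(words)):
            context = tuple(words[max(0, i - context_size):i])
            counts[context][words[i]] += 1
    return counts


def predict_next(counts, context_words, context_size=3):
    """Return the word seen most often after the given context, or None if unseen."""
    context = tuple(context_words[-context_size:])
    candidates = counts.get(context)
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


counts = build_next_word_counts(corpus)
print(predict_next(counts, ["the", "sky", "is"]))  # prints: blue
```

A counting model like this fails on any context it has never seen, which is one reason large language models use neural networks that can generalize beyond exact matches.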

Training Large Language Models

- Data Collection: Gathering a large amount of text data is the first stage in training a large language model. This data serves as the model’s training material. It can come from a variety of sources, including books, websites, and other texts, and should ideally cover a wide range of topics so that the model learns a diverse vocabulary and a broad understanding of context.

- Preprocessing: After collecting the data, it must be preprocessed. This entails cleaning the data and turning it into a format that the model can work with. Tokenization, the process of breaking text down into smaller units such as words or subwords, is a standard preprocessing step for language models.

- Model Architecture Selection: The next step is to choose the architecture of the neural network. Large language models typically use a type of model called a transformer, which is particularly good at handling sequential data and understanding context. The architecture determines how the model processes information and learns from it.

- Training: Once the data has been prepared and the model architecture has been chosen, the actual training can begin. This involves running the data through the model and adjusting the parameters based on the errors in the model’s predictions. The goal is to reduce the gap between the model’s predictions and the actual next words in the training text. This is typically accomplished with backpropagation and an optimization algorithm such as stochastic gradient descent.

- Assessment and fine-tuning: Following the initial training, the model is evaluated on a separate set of data (the validation set) to determine how well it performs on text it has not seen. At this point, it’s customary for the model to be fine-tuned, which means extra training on a more specialized task or dataset to improve its performance in that area.
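The tokenization step mentioned under preprocessing can be sketched in a few lines. This is a simplified illustration under stated assumptions: real tokenizers learn their subword vocabulary from data, whereas the vocabulary here is hand-picked, and the function names `word_tokenize` and `subword_tokenize` are made up for this example.

```python
import re


def word_tokenize(text):
    """Lowercase the text and split it into words and single punctuation marks."""
    return re.findall(r"[a-z0-9]+|[^\sa-z0-9]", text.lower())


def subword_tokenize(word, vocab):
    """Greedily split one word into the longest pieces found in vocab."""
    pieces = []
    start = 0
    while start < len(word):
        end = len(word)
        # Shrink the candidate piece until it matches a vocabulary entry.
        while end > start and word[start:end] not in vocab:
            end -= 1
        if end == start:
            # No vocabulary entry matches here, so mark the word as unknown.
            return ["<unk>"]
        pieces.append(word[start:end])
        start = end
    return pieces


# Hand-picked subword vocabulary, assumed for illustration only.
vocab = {"token", "ization", "un", "break", "able", "s"}

print(word_tokenize("Tokenization isn't hard!"))
print(subword_tokenize("tokenization", vocab))
print(subword_tokenize("unbreakable", vocab))
```

Splitting into subwords lets a model handle words it never saw whole during training, as long as their pieces are in the vocabulary.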

It should be noted that training and validating large language models requires substantial computing resources as well as expertise in machine learning and natural language processing. It’s also a highly iterative process that involves a lot of trial and error.
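The assessment step, where a model is checked on data held out from training, can be illustrated with a deliberately tiny stand-in model. The sketch below is an assumption-laden toy: it uses a word-frequency model with add-one smoothing rather than a neural network, the text is made up, and the names `train_unigram` and `avg_neg_log_likelihood` are hypothetical.

```python
import math
from collections import Counter


def train_unigram(tokens):
    """'Train' a word-frequency model: count each word and the total."""
    return Counter(tokens), len(tokens)


def avg_neg_log_likelihood(counts, total, tokens, vocab_size):
    """Average loss per token, using add-one smoothing so unseen words get nonzero probability."""
    loss = 0.0
    for token in tokens:
        probability = (counts[token] + 1) / (total + vocab_size)
        loss -= math.log(probability)
    return loss / len(tokens)


# Made-up text, split into a training part and a held-out validation part.
text = "the sky is blue the grass is green the sea is blue the sky is clear".split()
split = int(len(text) * 0.75)
train_tokens, val_tokens = text[:split], text[split:]
vocab_size = len(set(text))

counts, total = train_unigram(train_tokens)
train_loss = avg_neg_log_likelihood(counts, total, train_tokens, vocab_size)
val_loss = avg_neg_log_likelihood(counts, total, val_tokens, vocab_size)

print("training loss:  ", round(train_loss, 3))
print("validation loss:", round(val_loss, 3))
print("validation perplexity:", round(math.exp(val_loss), 3))
```

Here the validation loss comes out higher than the training loss because the held-out text contains a word the model never saw. Comparing the two numbers is a basic way to judge how well a model handles text beyond its training data.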

Conclusion

Large language models are a big step forward in the field of artificial intelligence. Their ability to interpret and generate human-like text has the potential to transform a variety of industries, from chatbots to content development and beyond.

